The Problem

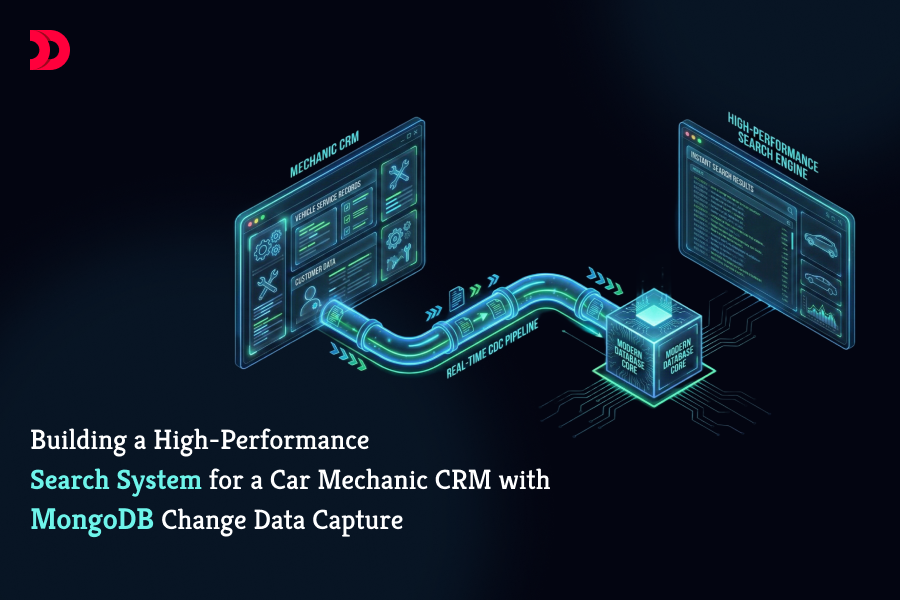

In our car mechanic CRM application, users needed to search across multiple entities simultaneously-customers, their vehicles, appointment history, and service records. However, our data architecture presented a significant challenge.

The Data Architecture Challenge

Our application followed database normalization best practices. Data was organized into separate collections:

- Users collection – Customer information

- Appointments collection – Service appointment records

- Vehicles collection – Vehicle details with references to master data collections

While this normalized structure kept our data clean and avoided redundancy, it created a performance bottleneck for search operations. To execute a single search query, we needed to perform 2-3 layers of lookups across collections, followed by text regexp searches. The result? Unacceptably slow search performance that degraded user experience.

Our Solution: Denormalized Search Index

We implemented a centralized search solution using a dedicated collection called userSearch. This collection stores denormalized data in string format, combining:

- User information

- All associated vehicles

- Last appointment data

- Relevant service history

By leveraging MongoDB Atlas Search, we created indexes that could query this consolidated data efficiently, dramatically improving search performance.

The Real Challenge: Data Synchronization

Creating the search index was straightforward. The difficult part was ensuring data consistency. Our userSearch collection needed to remain synchronized with source data across 4-5 different collections, with updates reflected within 5 seconds to maintain a smooth user experience.

This is where things got interesting.

Implementing Change Data Capture (CDC)

We built a CDC pipeline using MongoDB Change Streams to monitor data changes in real-time. Here’s how it worked:

- Change streams were set up for all collections containing user-related data

- Our Node.js/Express application subscribed to these streams

- When changes occurred, our CDC subscribers processed the events and updated the search index accordingly

This architecture kept our search index eventually consistent with the source data, typically updating within seconds of any change.

The Silent Failure Problem

Just when we thought we had solved the problem, we discovered a critical issue: change streams would randomly stop working.

The frustrating part? They failed silently-no errors, no warnings, nothing. Our CDC pipeline would simply stop processing updates without any indication that something was wrong.

Root Cause Analysis

After carefully examining the Atlas console logs and monitoring server behavior, we identified the culprit: server re-election events. Whenever MongoDB’s replica set elected a new primary server, our change streams would terminate without throwing any exceptions.

The Heartbeat Solution

To detect and recover from these silent failures, we implemented a heartbeat mechanism:

How It Works

- Heartbeat Documents – A background cron job runs every 5 minutes, inserting a special document flagged as a heartbeat into the database

- CDC Validation – Our CDC subscribers watch for these heartbeat documents. When detected, they immediately delete them

- Failure Detection – The cron job checks for the presence of heartbeat documents with the flag. If any exist, it means the CDC subscriber failed to delete them, indicating the CDC pipeline has stopped

- Automatic Recovery – When a stopped CDC is detected, the system automatically restarts the change stream subscriptions

This mechanism ensured our CDC pipeline would self-heal, but introduced a new concern: what about the updates that occurred during those 5 minutes of downtime?

Preventing Data Loss with Resume Tokens

MongoDB Change Streams provide a powerful feature called resume tokens-essentially bookmarks that mark specific points in the change stream.

Our Implementation

- After processing each CDC event, we write the resume token to a file

- When reconnecting after a failure, we use the last saved token to resume from exactly where we left off

- This ensures no database updates are lost, even during CDC downtime

The file-based approach gives us persistence across application restarts and provides a simple recovery mechanism.

The Complete System

Our final architecture combines multiple components working in harmony:

Search Layer

- Denormalized userSearch collection

- Atlas Search indexes for fast querying

Synchronization Layer

- CDC pipelines monitoring 4-5 source collections

- Real-time updates to search index

Reliability Layer

- Heartbeat monitoring every 5 minutes

- Automatic CDC restart on failure detection

- Resume token persistence for zero data loss

Technical Stack

- Backend: Node.js with Express (monolithic architecture)

- Database: MongoDB Atlas

- Search: Atlas Search with custom indexes

- CDC: MongoDB Change Streams

- Monitoring: Custom heartbeat mechanism with cron jobs

Results

This architecture delivers:

- Fast search performance – Sub-second queries across all user, vehicle, and appointment data

- Data consistency – Updates reflected in search within 5 seconds under normal operation

- Fault tolerance – Automatic recovery from change stream failures

- Zero data loss – Resume tokens ensure no updates are missed during reconnection

Lessons Learned

- Denormalization has its place – While normalization is important for data integrity, strategic denormalization can dramatically improve read performance

- Monitor your monitoring – Change streams are powerful but can fail silently. Always implement health checks for critical infrastructure

- Design for failure – Resume tokens and heartbeat mechanisms might seem like overkill, but they’re essential for production reliability

- Eventually consistent is often good enough – A 5-second buffer for search index updates provides excellent user experience while allowing for robust error recovery

Conclusion

Building a high-performance search system for normalized data requires thinking beyond traditional query optimization. By combining denormalized search indexes, change data capture, and fault-tolerant synchronization mechanisms, we created a system that delivers both speed and reliability.

The key insight is that complex systems require defense in depth-not just the primary mechanism (CDC), but also monitoring (heartbeat), recovery (automatic restart), and data integrity guarantees (resume tokens). Each layer addresses a different failure mode, resulting in a system that’s greater than the sum of its parts.

Jan 08, 2026

Jan 08, 2026

13 Views

13 Views